Content APIs

Manually manage the content in your search index or activate a crawler to manage it for you

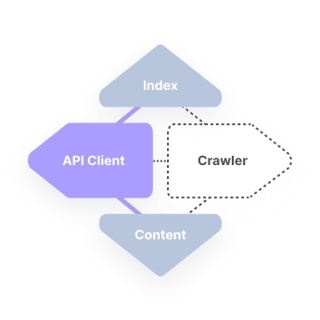

Managing your content

When implementing search, it's important to consider how content is stored in your search index and how that content can be managed over time. You might already know that the indexed content determines how pages are shown as search results, but it also affects other parts of the system, such as how you can filter search results and customize their relevance.

In many cases, search content is stored and managed automatically by a Cludo crawler configured in the MyCludo app. However, some Cludo users need a more custom or on-demand way to manage content. Those users can use the Crawler API or the Index API.

Authenticating API requests

Before you begin, we suggest you read the documentation on authentication. Your unique credentials can be used with the Postman collections and documentation examples linked below.

Crawler API

The Crawler API provides an on-demand way to activate a crawler and direct it to index (or re-index) a specific webpage or collection of webpages. After a request is made to the Crawler API, a crawler begins visiting the specified webpages within 5-10 minutes.
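To make the request flow concrete, here is a minimal Python sketch that prepares (but does not send) an on-demand crawl request for a list of URLs. The endpoint URL, the `{"urls": [...]}` body, and the HTTP Basic `customer_id:api_key` credential format are all hypothetical placeholders chosen for illustration; the real path, payload, and authentication scheme are defined in the Crawler API and authentication documentation.

```python
import base64
import json
import urllib.request

# HYPOTHETICAL endpoint: a placeholder, not a real Cludo URL.
# Use the path given in the Crawler API documentation instead.
CRAWL_ENDPOINT = "https://api.example.com/crawler/push"


def basic_auth_header(customer_id: str, api_key: str) -> str:
    """Build an HTTP Basic auth header value (ASSUMED scheme; check the authentication docs)."""
    token = base64.b64encode(f"{customer_id}:{api_key}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_crawl_request(urls: list[str], customer_id: str, api_key: str) -> urllib.request.Request:
    """Prepare, without sending, a POST request asking a crawler to (re-)index the given URLs."""
    if not urls:
        raise ValueError("at least one URL is required")
    # HYPOTHETICAL payload shape: a JSON object with a list of page URLs.
    body = json.dumps({"urls": urls}).encode("utf-8")
    return urllib.request.Request(
        CRAWL_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": basic_auth_header(customer_id, api_key),
        },
    )
```

Passing the returned object to `urllib.request.urlopen(req)` would send it; per the description above, the crawler would then start visiting the listed pages within 5-10 minutes. Keeping request construction separate from sending makes it easy to inspect or log the exact request before it goes out.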

When is this useful?

Some search content is time-sensitive: newly published news articles or important announcements often need to appear in search results as soon as possible. The Crawler API provides a way to index time-sensitive webpages as soon as they are available.

API documentation | Postman collection

Index API

When content is stored in an index, each unique page or document is broken into a collection of data fields. For example, a webpage is usually represented by a:

- Title field

- Description field

- Unique ID field (generally a URL)

- Any other custom fields like Published date or Category

The Index API provides a way to manage exactly what is stored for each of these data fields in a search index.
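One way to picture a single indexed document is as a record of named fields. The Python sketch below models the fields listed above; the class name and the field keys (`Url`, `Title`, `Description`) are hypothetical and do not reflect Cludo's actual index schema, which is described in the Index API documentation.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class IndexDocument:
    """One page or document as a set of index fields (illustrative, not Cludo's schema)."""

    url: str  # unique ID field; for webpages this is generally the URL
    title: str
    description: str = ""
    # Custom fields such as "Published date" or "Category".
    custom_fields: dict[str, Any] = field(default_factory=dict)

    def to_fields(self) -> dict[str, Any]:
        """Flatten into one field map, rejecting custom fields that would overwrite core ones."""
        # HYPOTHETICAL field keys chosen for this sketch.
        core = {"Url": self.url, "Title": self.title, "Description": self.description}
        clash = core.keys() & self.custom_fields.keys()
        if clash:
            raise ValueError(f"custom fields override core fields: {sorted(clash)}")
        return {**core, **self.custom_fields}


doc = IndexDocument(
    url="https://www.example.com/news/1",
    title="Product launch",
    custom_fields={"Category": "News", "Published date": "2024-01-15"},
)
```

Because every field value is set explicitly, what ends up in the index is exactly what this record contains; there is no extraction step that could pick up the wrong text for a field.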

When is this useful?

Crawlers are simple to use and are often a satisfactory way to get a website indexed, but they have limitations. A crawler can only index content that is exposed on the client side of the website. Crawlers also sometimes need configured rules that tell them where to find the content for specific fields, and any mismatch between those rules and the page content can lead to inaccurate data in the search index. The Index API bypasses these limitations and gives you complete control over what content ends up in a search index, even if that content isn't actually exposed on the website.
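As an illustration of indexing content that is never rendered on the site, the Python sketch below maps hypothetical records from an internal CMS directly to index fields and serializes them as a JSON batch. The record keys, the output field names, and the `{"documents": [...]}` payload are assumptions made for this example; the real request format is defined in the Index API documentation.

```python
import json
from datetime import date


def cms_record_to_document(record: dict) -> dict:
    """Map one internal CMS record straight to index fields, with no crawler rules involved.

    HYPOTHETICAL record shape: "category" and "published" live in the CMS
    but are not shown on the public page, so a crawler could not read them.
    """
    published: date = record["published"]
    return {
        "Url": record["url"],  # unique ID field
        "Title": record["headline"].strip(),
        "Description": record.get("summary", ""),
        "Category": record["category"],
        # Normalized from a real date object, so nothing is parsed from page text.
        "Published date": published.isoformat(),
    }


def build_index_payload(records: list[dict]) -> str:
    """Serialize a batch of documents as JSON (ASSUMED payload shape)."""
    docs = [cms_record_to_document(r) for r in records]
    urls = [d["Url"] for d in docs]
    # Each document needs its own unique ID, so reject duplicates early.
    if len(urls) != len(set(urls)):
        raise ValueError("duplicate unique IDs in batch")
    return json.dumps({"documents": docs})
```

The design choice here is that field values come from the source of truth rather than from the rendered HTML, which is what removes the risk of crawler rules and page markup drifting out of sync.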